Who Is Responsible Anyway? – Defining research accountability of researchers and institutions in the AI era

Should we be worried about research ethics in the AI era, or was it already at risk before AI arrived? The question of research ethics is not new; it has its roots in earlier scientific and technological advances. Even with stringent quality control measures in place, a few recent incidents have shaken the core belief in academia's commitment to upholding ethical standards above all. For example, the abrupt resignation of Marc Tessier-Lavigne as president of Stanford University, following an inquiry into irregularities in his past research, has sparked more than a mere administrative shakeup. The incident resonates beyond the confines of Stanford's ivory towers and signals the need to look more closely at accountability in research integrity and ethics.

The Stanford news is only one of many such cases in recent months. Another involves Francesca Gino, a researcher at Harvard University, who is now in a legal dispute with both Harvard and the scientists who raised concerns about data manipulation in her recently retracted publications. Additionally, Duke University is investigating Daniel Ariely, James B. Duke Professor of Psychology and Behavioral Economics, while Johns Hopkins University may open an investigation into research misconduct by Nobel laureate Gregg Semenza.

While all these incidents are both shocking and unfortunate, the Stanford incident stands out for highlighting the power dynamics within academia. It demonstrates the conventional authoritative privilege of established researchers, while the graduate students and postdoctoral researchers who play a key role in the research process are often overlooked or placed in the precarious position of safeguarding research integrity while coping with the pressure to "publish or perish".

So Who’s Really Responsible When Things Go Awry?

In the Stanford University situation, several news articles reported a mix of opinions, each reflecting a different perception of stakeholder accountability and responsibility. The students firmly held that since faculty members benefit from the research performed in their labs, they should bear the most responsibility when something goes wrong. The professors, while agreeing with this view, highlighted the importance of working as a team and establishing mutual trust among all parties. They underlined the need for supervision balanced with the freedom that lets new ideas grow, describing it as walking a tightrope between keeping research on track and letting creativity and teamwork thrive.

What emerges from this discourse is a clear disconnect between university administrators, researchers, and graduate students. Tessier-Lavigne was held accountable for failing to act promptly on the data errors and for not adequately implementing corrective measures. Should the inquiry also have considered his potential role in cultivating or perpetuating a publish-or-perish culture in his lab?

The academic struggle is a well-known phenomenon, and ignoring its existence in the hallowed halls of an elite institution would be disingenuous. Equipping students with the skills to handle a stressful and competitive environment is one of the basic tenets of the mentorship professors are expected to provide; yet the latest news suggests it carries little weight in such matters. The university's failure to assume responsibility is also evident from the current conversation. To avoid the damage this can do to the overall research environment and to the reputation of everyone involved, universities must take proactive steps to create an environment that supports the well-being and success of the academic community. H. Holden Thorp, editor-in-chief of the journal Science, criticized the "sluggish responses" of these bureaucratic cogs, which deepen the loss of trust in science both within and outside the academic community.

Now Let’s Talk About the Impact of AI in This Scenario

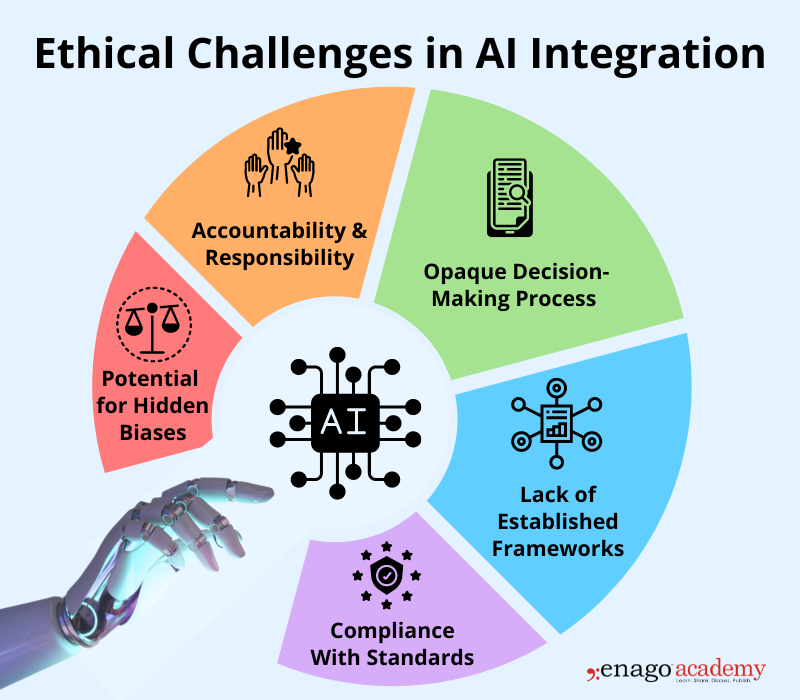

As we navigate the evolving landscape of AI, it is becoming essential to assess how AI could further influence, and perhaps complicate, the balance between accountability, trust, and oversight in collaborative scientific work. While the rapid integration of AI into many facets of research accelerates discovery and enhances scientists' capabilities, it also raises complex ethical questions that demand careful examination.

One of the most significant ethical repercussions of AI integration lies in the allocation of accountability. With AI systems taking on tasks ranging from data analysis to experimental design, the lines of responsibility can become blurred. In cases of scientific misconduct or error, determining whether responsibility rests with the human researchers or with the AI algorithms involved can be challenging. This dilemma echoes the Tessier-Lavigne case, where the question of blame became a focal point of debate. Transparency is equally important. Research conducted with AI algorithms can be intricate and opaque, making it difficult to trace decision-making processes and to detect hidden biases or errors. Ensuring transparency in AI-driven research methods is vital not only for the integrity of scientific inquiry but also for building trust within the research community and with the broader public.

As AI systems become part of collaborative research, it is essential to establish ethical guidelines for their use. Just as graduate students emphasized that faculty members are accountable for the work done in their labs, researchers employing AI should take responsibility for the ethical use and implications of AI tools.

This does not, however, absolve universities of the responsibility to create opportunities for the sustainable and ethical integration of AI into research workflows. While many premier universities have ethics institutes and centers for AI research, there is a distinct lack of frameworks that specify, in clear, black-and-white terms, what researchers can and cannot do with AI. Institutions and regulatory bodies must collaborate on policies that encourage responsible AI integration and foster a culture in which researchers are well trained in the ethical considerations of using AI and can therefore be held accountable for their choices, rather than leaving individual researchers to enforce a code of conduct under the guise of academic freedom.

AI's potential to enhance collaboration and interdisciplinary research also has ethical dimensions. Researchers must grapple with dilemmas arising from collaborations between AI experts and domain specialists, ensuring that the convergence of expertise does not lead to conflicts or cause critical ethical considerations to be overlooked, even ones as basic as the assignment of authorship. This is another area where university administrations are best placed to facilitate conversations that can avoid or minimize integrity concerns and improve compliance with university and publishing standards.

Moreover, while much of the conversation is U.S.-centric, the opacity and sluggishness of such decision-making and policy processes can destabilize academic structures elsewhere too. For example, while the European Code of Conduct for Research Integrity was updated in early 2023, the process took three years, in part because of the COVID-19 pandemic and the need for stakeholder input. Given the rapid pace of AI innovation, such guidelines could be outdated within months, not to mention the lack of guidance on how individual universities should enforce and implement such recommendations.

So, What’s Next?

It would be unwise to underestimate the impact of AI on the research and innovation landscape, or to delay framing policies that nurture an ethical and responsible approach to AI integration. There is an urgent need to take a step back and work together on assessment and countermeasures, rather than debating who is to blame and who deserves credit for successful research.

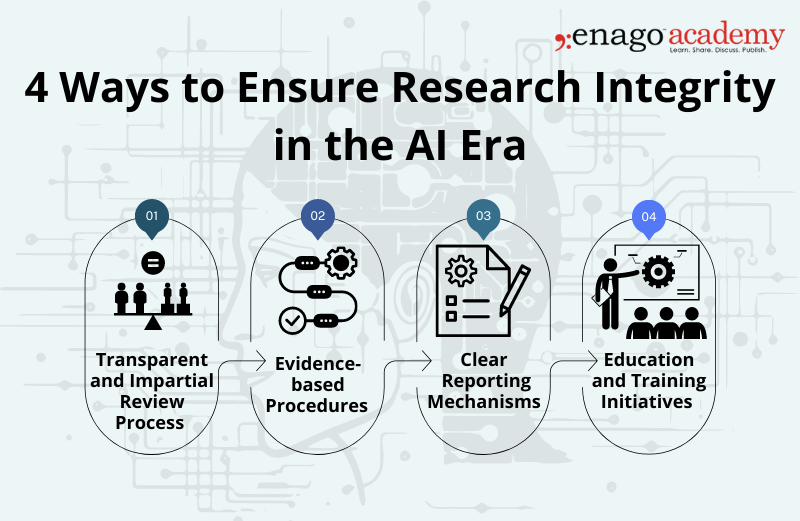

Maintaining a fair balance of power in determining the outcomes of, and assigning blame for, research malpractice and data fabrication is critical. An equitable process is essential not only for the integrity of the scientific community but also for upholding the principles of justice and accuracy.

When instances of research misconduct arise, it is crucial to have a framework that prevents undue influence or bias from skewing the investigation and its consequences. This must begin with a transparent and impartial review process involving individuals with diverse perspectives and expertise, uninfluenced by the credibility or stature of an author or their affiliated organization. Involving a range of stakeholders, such as independent researchers, ethicists, and institutional representatives, mitigates the risk of a power imbalance. This collective approach helps guard against personal interests or hierarchical dynamics that could unfairly affect the outcome. Furthermore, blame must be assigned through evidence-based procedures rather than personal assumptions or preconceived notions. Objective analysis of the facts, meticulous documentation, and adherence to established protocols are essential components of a just investigation. They ensure that culpability is determined from verifiable information rather than subjective interpretations that could reinforce or perpetuate power imbalances.

Clear and comprehensive mechanisms for assessing and reporting research misconduct also help prevent power imbalances. Whistleblower protections, anonymous reporting options, and independent oversight bodies play a pivotal role in maintaining fairness by allowing individuals to voice concerns without fear of retaliation.

To avoid perpetuating power imbalances, education and training initiatives are essential. Researchers, institutions, and other stakeholders should continuously engage in discussions about ethical practices, the responsible conduct of research, and the pitfalls of power dynamics. This will foster a culture of accountability and ensure that everyone involved understands their responsibilities in upholding research integrity.

As lawsuits surround OpenAI and multiple external factors dominate conversations about ethical AI use, the academic community should hold an honest discussion, involving stakeholders at every level, about how to safeguard research integrity in the age of AI, and those stakeholders should be given the freedom to do so! It's time to demand transparency in every step of the research process. Be at the forefront of change. Be part of the change. Allow the change to happen.