Metrics That Matter: Redefining research success beyond impact factors

In the ever-evolving landscape of academic research publication, success has long been measured by the impact factor (IF), a numerical representation of a journal’s influence within the scholarly community. But do these numbers truly capture the essence of research success? As the academic world continues to transform, it becomes imperative to reassess traditional metrics and broaden our definition of success. The narrow reliance on impact factors has come under scrutiny, revealing inherent flaws and pushing scholars to reconsider how success is gauged. In this article, we explore the metrics that truly matter, moving beyond the limitations of impact factors toward a more holistic approach to evaluating research success.

Perspectives on High Impact Factor Publications Across Career Stages

Embarking on an academic career is akin to entering a competitive arena. The sheer volume of PhDs and the scarcity of academic openings intensify the competition. Once secured, the path to tenure and grants adds another layer of challenges. Central to this journey is the publication game, where the ability to publish in high-IF journals is often seen as the ultimate benchmark of success.

In the contemporary job market, crucial elements such as hiring decisions, promotions, grant funding, and even bonuses are intricately tied to a metric that remains enigmatic to many. Certain universities even dismiss job applications lacking at least one first-author publication in a high-impact journal.

“Job applications at some universities are not even processed until applicants have published at least one paper in a high-impact journal with first authorship. At other institutions tenure is granted when the combined impact factor of the journals in which an applicant’s articles were published reaches a threshold; failure to reach the threshold can influence career advancement.” – Inder M. Verma (former Editor-in-Chief of PNAS)

While the IF was conceived as a tool for broad assessment, it has become a critical determinant in the evaluation of individual researchers, especially those at the early stages of their careers. For early-career researchers (ECRs), the allure of high-IF publications is undeniable. These coveted venues promise visibility, recognition, and a potential springboard for career advancement. However, the pressure to secure such publications can be daunting and may lead to strategic decisions that compromise the integrity of the research.

“Pressuring early-career scientists to publish in high-impact multidisciplinary journals may also force them to squeeze their best work into a restrictive publication format (limiting page numbers and graphical elements) that, ironically, can reduce its ultimate impact.” – May R. Berenbaum (Editor-in-Chief of PNAS)

In a post on BMC’s Research in Progress Blog titled “Impact Factors and Academic Careers: Insights from a Postdoc Perspective”, Dr. Bryony Graham noted that one of the first things reviewers do when assessing a grant application is examine the ‘Publications’ section of the applicant’s CV.

“Regardless of how unrepresentative and misleading impact factors are, my funding and therefore my career depend on them, and that’s that.” – Bryony Graham, PhD

Understanding the Uses and Flaws of Impact Factor: A double-edged sword

The journal IF has its roots in 1955, when Eugene Garfield, a renowned information scientist and the founder of the Institute for Scientific Information (later incorporated into Thomson Reuters), first formulated the concept of science citation analysis. By 1972, this concept had evolved into the idea of using the “journal impact factor” to assess the relative “importance” of a journal. The metric estimates a journal’s impact as an average citation rate per published article: the number of citations received in a given year by the articles the journal published in the preceding two years, divided by the number of articles it published in those two years. Garfield envisioned the IF as a valuable tool for librarians managing journal collections, scientists prioritizing their reading, editors evaluating journal performance, and science policy analysts identifying new research fronts. Yet, by 1998, in an article titled “From citation indexes to informetrics: Is the tail now wagging the dog?,” Garfield expressed concern over the misuse of his creation. He highlighted the potential for abuse in the evaluation of individual research performance through citations, emphasizing the need for informed use of citation data.

“Ironically, these impact measures have received much greater attention in the literature than the proposed use of citation indexes to retrieve Information. This is undoubtedly due to the frequent use and misuse of citations for the evaluation of individual research performance — a field which suffers from inadequate tools for objective assessment.” – Eugene Garfield (founder of the Institute for Scientific Information)

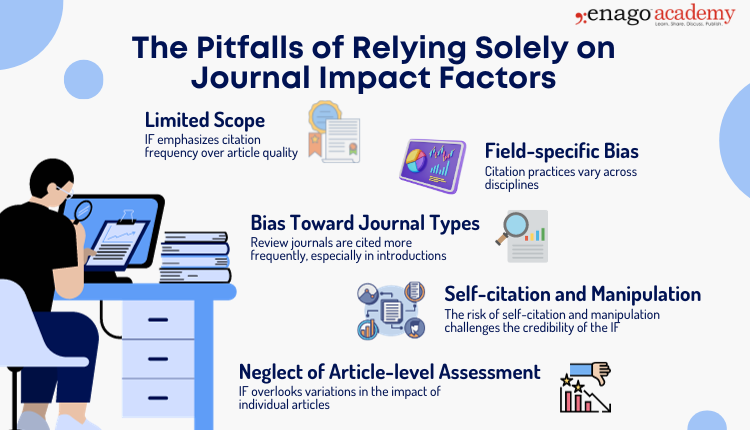

Thus, the IF, while a valuable metric, demands judicious interpretation to avoid ill-informed conclusions. Several key flaws highlight the inadequacy of this metric:

1. Limited Scope:

IF primarily measures the frequency of citations a journal receives, focusing on quantity rather than the inherent quality of individual articles. This approach can overlook groundbreaking research published in less frequently cited journals.

2. Field-specific Bias:

Cross-disciplinary comparisons are hindered by the significant variations in citation practices across different fields. For instance, the top impact factor within the subject category ‘Dentistry’ stands at 14.9, while in ‘Oncology,’ CA Cancer J Clin boasts a substantial impact factor of 254.7. However, this does not imply that Oncology is truly superior or more significant than Dentistry. Distinct disciplines exhibit varied citation practices, and general journals often hold an advantage over their more specialized counterparts.

3. Bias Toward Journal Types:

IFs exhibit bias toward journals that predominantly publish review articles. These journals tend to be cited more frequently, particularly in manuscripts’ introductions.

4. Self-citation and Manipulation:

The potential for self-citation raises questions about the integrity of the IF. Authors and editors may strategically boost citations, and journals may attempt to manipulate the IF by urging authors to reference articles within the same journal.

5. Neglect of Article-level Assessment:

IF aggregates citations at the journal level, overlooking variations in the impact of individual articles within the same publication. This generalization hinders a nuanced evaluation of research quality.
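To make the article-level point concrete, here is a minimal Python sketch. The citation counts are invented purely for illustration and do not describe any real journal; the sketch only shows how one heavily cited paper can pull a journal-level average far above what a typical article in that journal receives.

```python
from statistics import mean, median

# Hypothetical citation counts for ten articles in one journal
# (illustrative numbers only, not real data).
citations = [0, 1, 1, 2, 2, 3, 3, 4, 5, 179]

# Journal-level average, the kind of figure an IF-style metric reports.
journal_average = mean(citations)

# Median: what a "typical" article in this journal actually receives.
typical_article = median(citations)

# Share of articles cited less often than the journal average.
share_below_average = sum(c < journal_average for c in citations) / len(citations)

print(f"average citations per article: {journal_average}")
print(f"median citations per article:  {typical_article}")
print(f"share of articles below the average: {share_below_average:.0%}")
```

In this made-up example the average is 20 citations per article, yet the median is 2.5 and nine of the ten articles sit below the average. A journal-level figure alone would say little about any one of them.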

Nurturing a Holistic Approach for Informed Decision-making

In the quest to reshape academic values, promoting a paradigm shift towards a holistic approach to success and fostering a culture of collaboration over competition emerges as a transformative endeavor. Here are actionable ways to instigate this change:

1. Championing Holistic Metrics

- Diversify Evaluation Criteria: Encourage institutions and funding bodies to adopt multifaceted evaluation criteria that go beyond traditional metrics. Consider the societal impact, altmetrics, public engagement, and the mentorship contributions of researchers alongside publication records.

- Recognize Diverse Achievements: Establish recognition programs that celebrate diverse academic achievements, including impactful teaching, community engagement, and contributions to interdisciplinary research.

2. Embracing Open-Access Principles

- Incentivize Open Access Publishing: Develop institutional policies that incentivize open access publishing. Acknowledge and reward researchers for contributing to accessible knowledge dissemination, fostering a culture of inclusivity in scholarly communication.

- Support Preprints and Alternative Formats: Encourage the dissemination of research through preprints, blogs, and other innovative formats. Embrace diverse forms of communication that broaden the accessibility of research beyond traditional journal publications.

3. Promoting Mentorship and Collaboration

- Mentorship Programs: Establish mentorship programs that connect early career researchers with experienced mentors. Emphasize the value of mentorship in shaping well-rounded researchers who prioritize collaboration and ethical research practices.

- Collaborative Research Initiatives: Encourage collaborative research initiatives that bring together researchers from diverse backgrounds and disciplines. Foster an environment where collaboration is celebrated as a cornerstone of academic success.

4. Cultivating Inclusive Research Environments

- Inclusive Funding Opportunities: Advocate for funding opportunities that prioritize collaborative and interdisciplinary research projects. Support initiatives that promote diversity and inclusivity in research teams.

- Community-Building Events: Organize events, workshops, and seminars that facilitate networking and collaboration among researchers. Create spaces for interdisciplinary dialogue and knowledge exchange.

5. Educational Initiatives

- Integrate Holistic Success in Education: Incorporate discussions on holistic success, ethics, and collaborative practices into academic curricula. Equip students with a broader understanding of success beyond traditional metrics.

- Publicize Success Stories: Share success stories that highlight researchers who have excelled through collaboration, mentorship, and contributions to open-access initiatives. Showcase diverse paths to academic success.

Conclusion: Rethinking the numerical norms

The pressure on authors to submit papers to high-IF journals persists. As the academic community navigates these complexities, a reevaluation of the metrics that truly matter becomes imperative. While the impact factor remains a significant metric, it should not be the sole determinant of research quality or individual worth. A holistic evaluation that considers peer review, productivity, and subject-specialty citation rates alongside impact factors is essential for a fair and comprehensive assessment of scholarly contributions. As the scholarly landscape evolves, so should our metrics and approaches, fostering a culture that values collaboration over competition. In doing so, we pave the way for a more inclusive and nuanced evaluation of research success, ensuring that our scholarly endeavors contribute meaningfully to the advancement of knowledge and the betterment of society. If you’ve encountered similar experiences or have thoughts on redefining research success metrics, we invite you to share your insights in the comment section below.

Frequently Asked Questions

The impact factor (IF) is a metric that reflects the frequency with which the average article in a journal has been cited in a particular year. It is calculated by dividing the number of citations in the current year to articles published in the previous two years by the total number of articles published in the previous two years.
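The calculation described above can be sketched in a few lines of Python. This is only an illustration: the function name, parameter names, and the journal figures are hypothetical, and official impact factors depend on how the indexing database counts citations and citable items.

```python
def two_year_impact_factor(citations_this_year: int, articles_prev_two_years: int) -> float:
    """Illustrative two-year impact factor.

    citations_this_year: citations received this year by articles the
        journal published in the previous two years.
    articles_prev_two_years: number of articles the journal published
        in those two years.
    """
    if articles_prev_two_years <= 0:
        raise ValueError("the journal must have published at least one article")
    return citations_this_year / articles_prev_two_years


# Hypothetical journal: 200 articles published over the previous two years,
# cited 600 times in the current year.
example_if = two_year_impact_factor(citations_this_year=600, articles_prev_two_years=200)
print(example_if)
```

For this hypothetical journal the result is 3.0, meaning that articles from the previous two years were cited three times each on average during the current year.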

The impact factor (IF) is a widely used metric that quantifies the frequency with which articles in a journal are cited in a particular period. However, its application has notable limitations. Firstly, the IF's focus on citation frequency at the journal level can overshadow the quality of individual articles, potentially neglecting groundbreaking research in less frequently cited journals. Moreover, cross-disciplinary comparisons are complicated by variations in citation practices among different fields. The bias toward journals that primarily publish review articles and the potential for self-citation raise concerns about the metric's integrity. Recognizing these limitations is crucial for a more nuanced and critical interpretation of impact factor data.

To find the impact factor of a journal, you can typically refer to the journal's official website or access it through academic databases. Most journals prominently display their impact factor on their website. Alternatively, you can use reputable databases like Journal Citation Reports (JCR), which is available through platforms like Web of Science. JCR provides comprehensive information on journal impact factors, citation data, and other bibliometric indicators. Keep in mind that impact factors are usually released annually, so ensure you are accessing the most recent data for an accurate representation of a journal's impact in the academic community.

Altmetrics, encompassing online attention on platforms like social media and news outlets, offer insights into the broader societal influence of research. Citation count, providing a direct measure of how often an article is referenced, contributes a nuanced perspective on influence. The h-index, combining an author's publication volume and citation impact, presents a holistic view of their scholarly contributions. Journal reputation within a specific field adds another layer to the assessment, capturing the qualitative aspects of research impact. Evaluating open-access metrics, such as download statistics and online engagement, sheds light on the accessibility and reach of research. Considering collaboration and network metrics recognizes the collaborative nature of impactful research. Metrics like reader engagement, grants, and awards further enrich the evaluation process, offering a diversified and thorough approach to gauging research success.
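Of the metrics listed above, the h-index is the easiest to compute by hand: an author has an h-index of h when h of their papers have each been cited at least h times. The Python sketch below illustrates that standard definition. The function name and the sample citation counts are assumptions made for this example, and databases may report different values for the same author because they index different sets of publications.

```python
def h_index(citation_counts: list[int]) -> int:
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Hypothetical author with five papers (illustrative counts only).
print(h_index([10, 8, 5, 4, 3]))
```

Here the hypothetical author has four papers with at least four citations each, so the h-index is 4. Note that a single very highly cited paper does not raise the value on its own, which is one reason the h-index is usually read alongside other indicators rather than in isolation.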

Alternative research metrics, commonly known as altmetrics, represent a diverse set of indicators that extend beyond traditional citation-based measures to assess the impact and visibility of scholarly work. These metrics encompass various online activities and engagements, such as mentions in social media, news articles, blog posts, and online forums. Altmetrics aim to capture the broader influence of research within the digital landscape, reflecting how scholarly output resonates with diverse audiences. By considering alternative research metrics, individuals can gain insights into the societal and cultural impact of academic work, complementing traditional citation-based assessments.