Why ChatGPT Alone Is Not Enough: The Importance of Human Input in Research and Publishing

ChatGPT has been hyped as a game-changer in research and publishing. But beyond its ability to generate content quickly, can ChatGPT or other AI tools replace humans? No!

ChatGPT is not a magic bullet that can solve all of our problems. While it has the potential to make certain tasks easier and more efficient, it cannot completely replace human intuition and expertise.

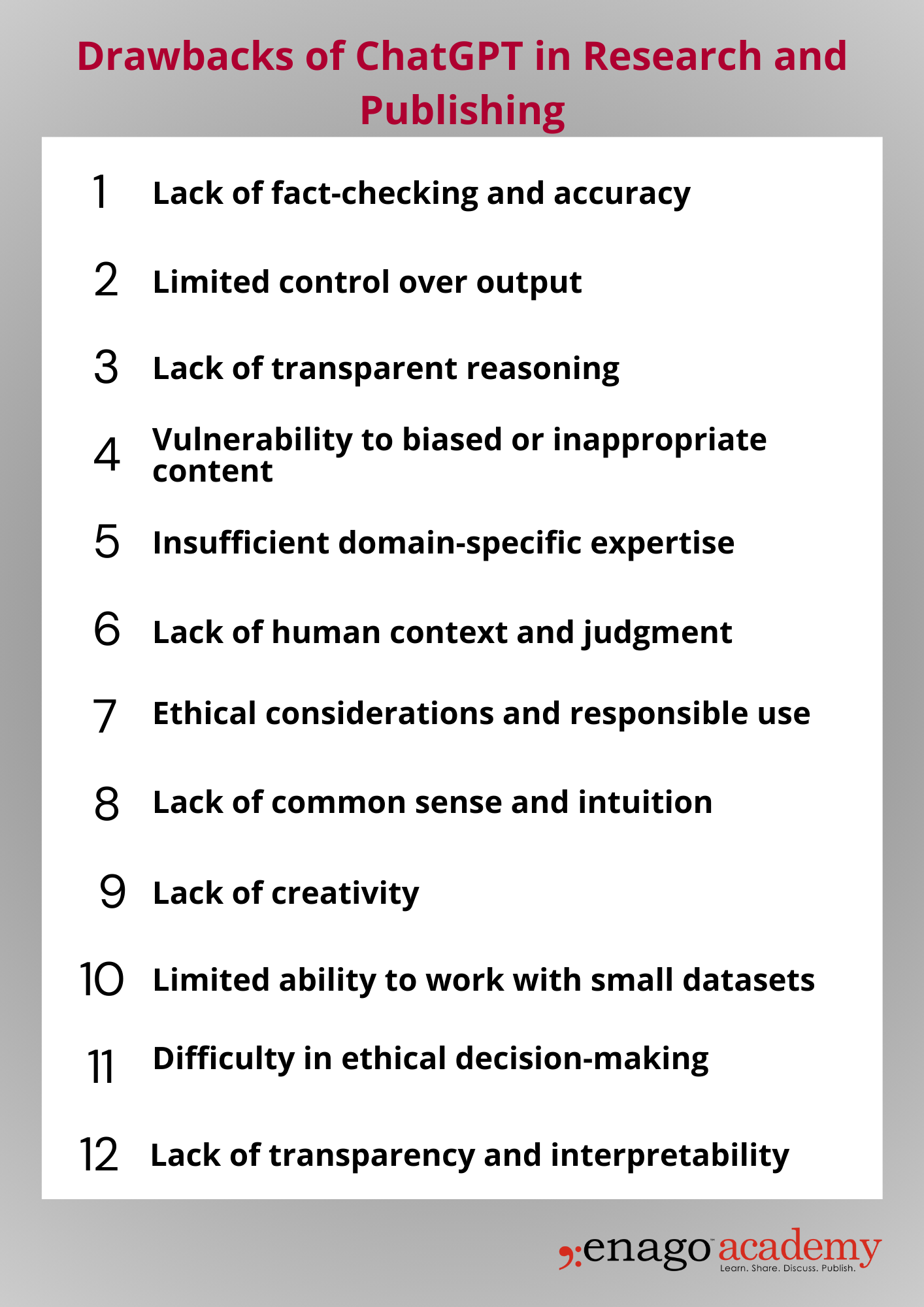

Drawbacks of Using ChatGPT in Research and Publishing

The use of ChatGPT in research and publishing has several limitations, which include the following:

1. Lack of fact-checking and accuracy

ChatGPT generates responses based on patterns it has learned from its training data, but it does not possess inherent knowledge or the ability to fact-check information. This can lead to inaccuracies, especially with recent or highly specialized information, because its training data stops at a cutoff date. The model can also produce plausible-sounding statements, and even references, that turn out to be wrong. Researchers relying solely on ChatGPT may therefore struggle to verify the validity and accuracy of the information it provides.

2. Limited control over output

ChatGPT operates as a generative model and can produce outputs that are creative and varied. While this can be advantageous in certain scenarios, it can pose challenges in academic research and publishing where precision and control over the output are crucial. ChatGPT may generate responses that are speculative, off-topic, or lack the necessary level of detail required for scholarly work.

3. Lack of transparent reasoning

ChatGPT does not explicitly explain the reasoning behind its answers. This lack of transparency can be problematic when attempting to understand the underlying logic or justifications for particular conclusions or claims. Researchers and readers may find it challenging to evaluate the reliability and validity of the information provided by ChatGPT.

4. Vulnerability to biased or inappropriate content

ChatGPT is trained on vast amounts of text from the internet, which includes both reliable and unreliable sources. Consequently, the model may inadvertently reproduce biased or inappropriate content present in its training data. This limitation can undermine the objectivity and integrity of academic research and publishing, potentially leading to the dissemination of inaccurate or biased information.

5. Insufficient domain-specific expertise

ChatGPT’s knowledge is limited to what it has learned from its training data. Consequently, when dealing with complex or specialized subject matter, the model’s responses may lack the depth of understanding and domain-specific expertise required for rigorous academic research. Researchers may need to consult additional resources or domain experts to compensate for these limitations.

6. Lack of human context and judgment

ChatGPT lacks the ability to understand nuanced contextual cues or to fully interpret the intent behind a query. As a result, its responses may not adequately address the underlying question or may overlook important contextual factors. Academic research often requires human judgment and critical thinking, which ChatGPT may not possess to the same extent.

7. Ethical considerations and responsible use

The use of ChatGPT in academic research and publishing raises ethical considerations related to authorship, plagiarism, and responsible use of AI-generated content. It is essential for researchers to properly acknowledge and attribute the use of AI-generated text to maintain academic integrity. Additionally, researchers must adhere to ethical guidelines and ensure that the outputs generated by ChatGPT align with ethical norms and principles.

8. Lack of common sense and intuition

ChatGPT is not capable of understanding and applying common sense or intuition in decision-making. It cannot reliably take context into account when making decisions.

9. Lack of creativity

ChatGPT cannot replicate human creativity and originality in generating new ideas, hypotheses, or research questions. It is not equipped to deal with unexpected situations that demand creative problem-solving.

10. Limited ability to work with small datasets

ChatGPT requires large amounts of training data, which can be a challenge in fields where data is scarce or difficult to obtain. This can limit the applicability of ChatGPT and other AI tools in certain fields. For example, an AI system used to diagnose medical conditions may struggle to diagnose a rare disease that does not fit the typical patterns found in its training data.

11. Difficulty in ethical decision-making

ChatGPT struggles to make ethical decisions that require a nuanced understanding of values and principles. Data misuse is another ethical concern related to ChatGPT and other AI-based applications. Using ChatGPT may involve sharing sensitive data, which raises concerns about privacy and data security. If this data is not properly secured, it can be vulnerable to theft or misuse.

12. Lack of transparency and interpretability

Lack of transparency can blur accountability for decisions made by ChatGPT and other AI systems. For instance, authors may not realize that they ultimately bear responsibility for the content of their work, even when ChatGPT or other AI tools help them create it.

Therefore, to use ChatGPT ethically in research and publishing, it is crucial to recognize and address these ethical concerns and biases.

The Indispensable Role of Humans in Research and Publishing

The value of human involvement in the academic environment cannot be overemphasized. While ChatGPT can be trained on massive datasets, it lacks the depth of understanding that humans with years of hands-on experience have. Human involvement is critical for tasks that require this level of subject knowledge. Human contribution is also essential in joint research initiatives.

Collaboration among researchers from various fields throughout the world can lead to fresh insights and achievements that would not be feasible with ChatGPT alone. Effective collaboration allows researchers to bring different perspectives and expertise to a problem, leading to more innovative and impactful research.

Moreover, humans can take into account a wide range of factors when making decisions, including ethical considerations, social dynamics, and personal experience. While ChatGPT can automate certain tasks, it cannot make ethical judgments or ensure that research respects human subjects and meets other ethical requirements. Human input is necessary to ensure that research is conducted ethically and responsibly.

There are certain things that ChatGPT cannot do but humans can. Researchers need to be aware of these limitations and the unique abilities that only humans possess. By working in conjunction with ChatGPT and other AI tools, researchers can maximize the benefits of both human intelligence and artificial intelligence to advance the field of research.

Have you ever used ChatGPT or other AI-based tools for your research? Let us know in the comments section below what challenges you faced and which strategies you followed! You can also use #AskEnago and tag @EnagoAcademy on Twitter, Facebook, and Quora to reach out to us. We would be happy to discuss further and provide additional insights.